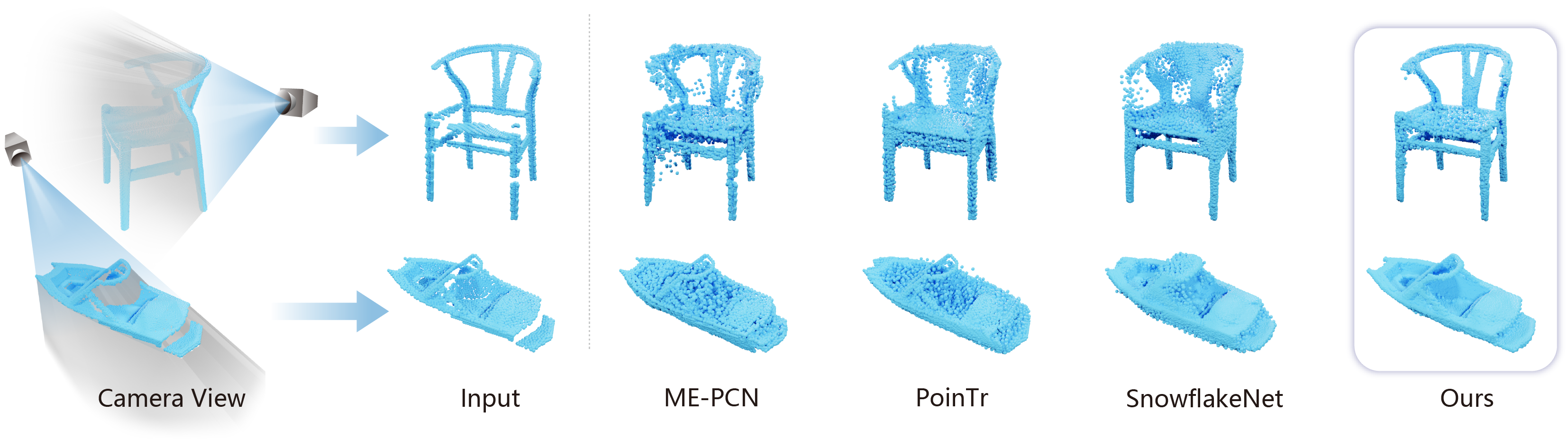

Fig. 1. Given a single-view partial scan, our method considers the volume that is shadowed by the observed points and generates more complete and cleaner results than ME-PCN, PoinTr, and SnowflakeNet.

Single-view point cloud completion aims to recover the full geometry of an object from only limited observations, which is extremely hard due to data sparsity and occlusion. The core challenge is to generate plausible geometry to fill the unobserved part of the object based on a partial scan, a problem that is under-constrained and suffers from a huge solution space. Inspired by the classic shadow volume technique in computer graphics, we propose a new method that reduces the solution space effectively. Our method treats the camera as a light source that casts rays toward the object. These light rays form a reasonably constrained but sufficiently expressive basis for completion. The completion process is then formulated as a point displacement optimization problem. Points are initialized at the partial scan and then moved to their goal locations with two types of movements for each point: directional movement along the light rays and constrained local movement for shape refinement. We design neural networks to predict the ideal point movements and thereby obtain the completion results. We demonstrate through exhaustive evaluation and comparison that our method is accurate, robust, and generalizable. Moreover, it outperforms state-of-the-art methods qualitatively and quantitatively on the MVP dataset.

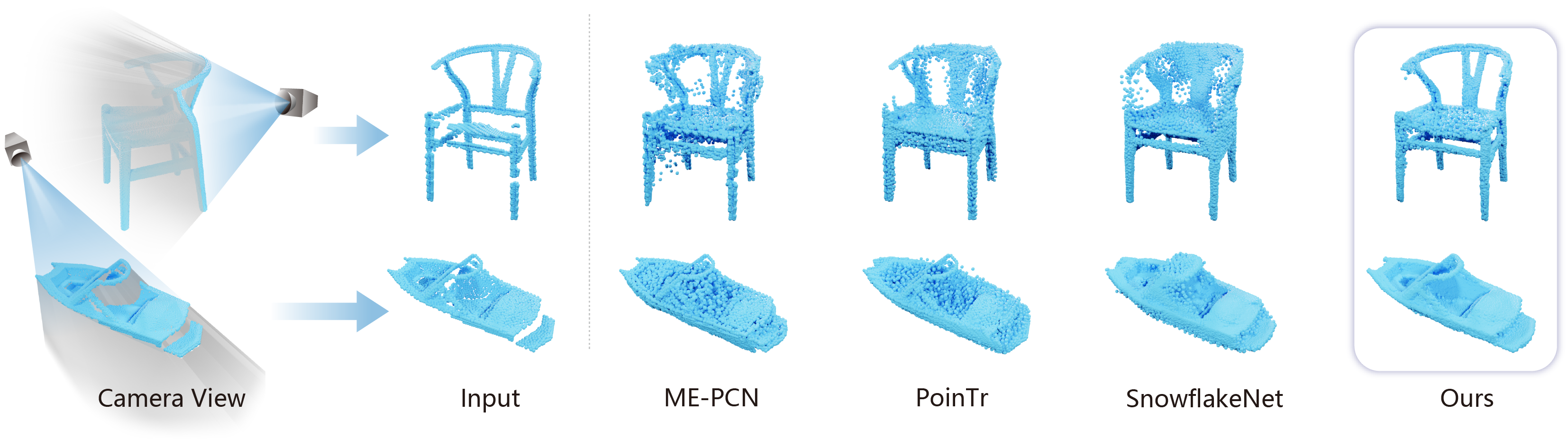

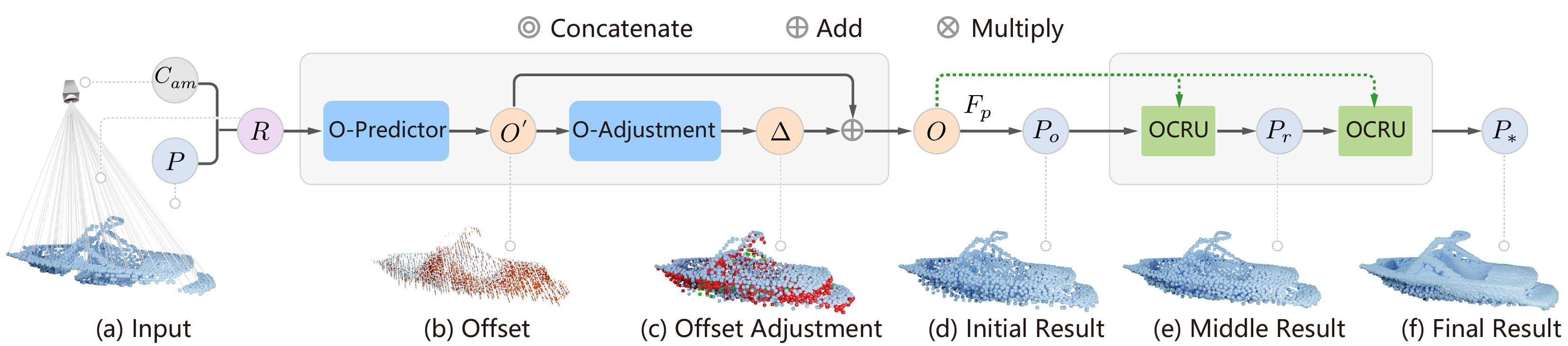

Our method treats the camera as a light source that casts rays toward the object. We divide the whole space into two parts: the forbidden volume, where no completed points may be placed, and the shadow volume, within which the partial point cloud should be completed. To achieve this, we formulate the completion process as a point displacement problem. Points are initialized at the partial scan and then moved to their goal locations with two types of movements. First, we place multiple points on each observed point and move them along the ray to produce initial guesses of the complete geometry. Second, we split each red point into multiple blue points and move them within a constrained local neighborhood. We design a neural network to predict these movements automatically. The network includes two main modules: the offset prediction module and the offset-constrained refinement module. Given the camera location and partial scan as input, the offset prediction module predicts the movement along the rays, and the offset-constrained refinement module estimates the local movement.

Fig. 2. The completion process.
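The two movements can be illustrated with a small NumPy sketch. This is not the released implementation: the function names, the array shapes, the clamping of negative offsets, and the norm cap `radius` are all assumptions made for illustration, and the `offsets` and `deltas` arrays simply stand in for values that the networks would predict.

```python
import numpy as np

def ray_directions(camera, partial):
    """Unit directions of the rays cast from the camera through each observed point."""
    d = partial - camera                                  # (N, 3)
    return d / np.linalg.norm(d, axis=1, keepdims=True)

def move_along_rays(camera, partial, offsets):
    """Place K points on every observed point and push them away from the camera.

    offsets: (N, K) distances along each ray (stand-in for network output).
    Assumption: negative offsets are clamped to zero so that no point moves
    in front of the observed surface, i.e. out of the shadow volume.
    """
    dirs = ray_directions(camera, partial)                # (N, 3)
    offsets = np.maximum(offsets, 0.0)                    # (N, K)
    pts = partial[:, None, :] + offsets[:, :, None] * dirs[:, None, :]
    return pts.reshape(-1, 3)                             # (N*K, 3)

def constrained_local_move(points, deltas, radius):
    """Split each point into M children and move each one locally.

    deltas: (P, M, 3) displacements (stand-in for network output).
    Assumption: the constraint is modeled as a cap on the displacement norm.
    """
    norms = np.linalg.norm(deltas, axis=2, keepdims=True)
    scale = np.minimum(1.0, radius / np.maximum(norms, 1e-12))
    children = points[:, None, :] + deltas * scale        # (P, M, 3)
    return children.reshape(-1, 3)                        # (P*M, 3)

# Tiny usage example with hand-picked values.
camera = np.zeros(3)
partial = np.array([[0.0, 0.0, 2.0], [3.0, 0.0, 4.0]])
offsets = np.array([[1.0, -0.5], [5.0, 0.0]])
initial = move_along_rays(camera, partial, offsets)
refined = constrained_local_move(
    np.zeros((1, 3)), np.array([[[3.0, 4.0, 0.0], [0.1, 0.0, 0.0]]]), radius=1.0
)
```

Restricting the first movement to a single scalar per point along its ray is what shrinks the solution space: each initial guess has one degree of freedom instead of three, and the local step only has to correct small residuals.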

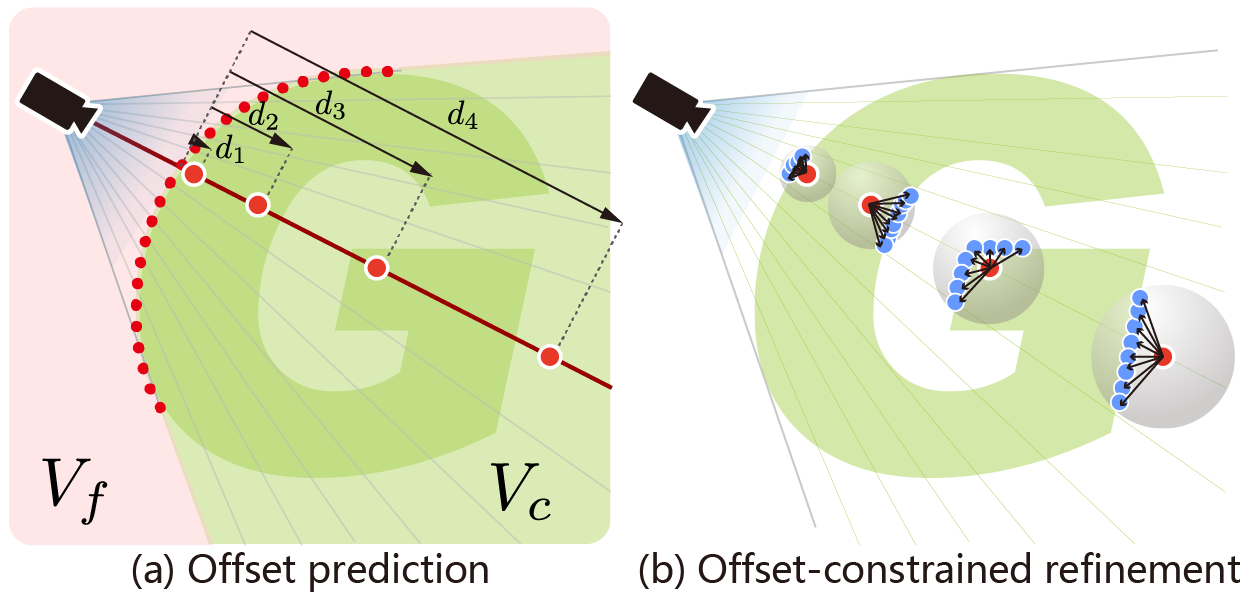

Fig. 3. Overview of our method. Given the camera location and partial scan, we compute a batch of rays that serves as the network's input (a). For each ray, we first predict an offset $O'$, visualized by lines in (b), and then predict an adjustment for each offset, visualized in (c), where green points represent positive adjustments and red points represent negative adjustments. Starting from the initial completion result (d), we further apply a two-step refinement to obtain a smoother result (e) and the final result (f).

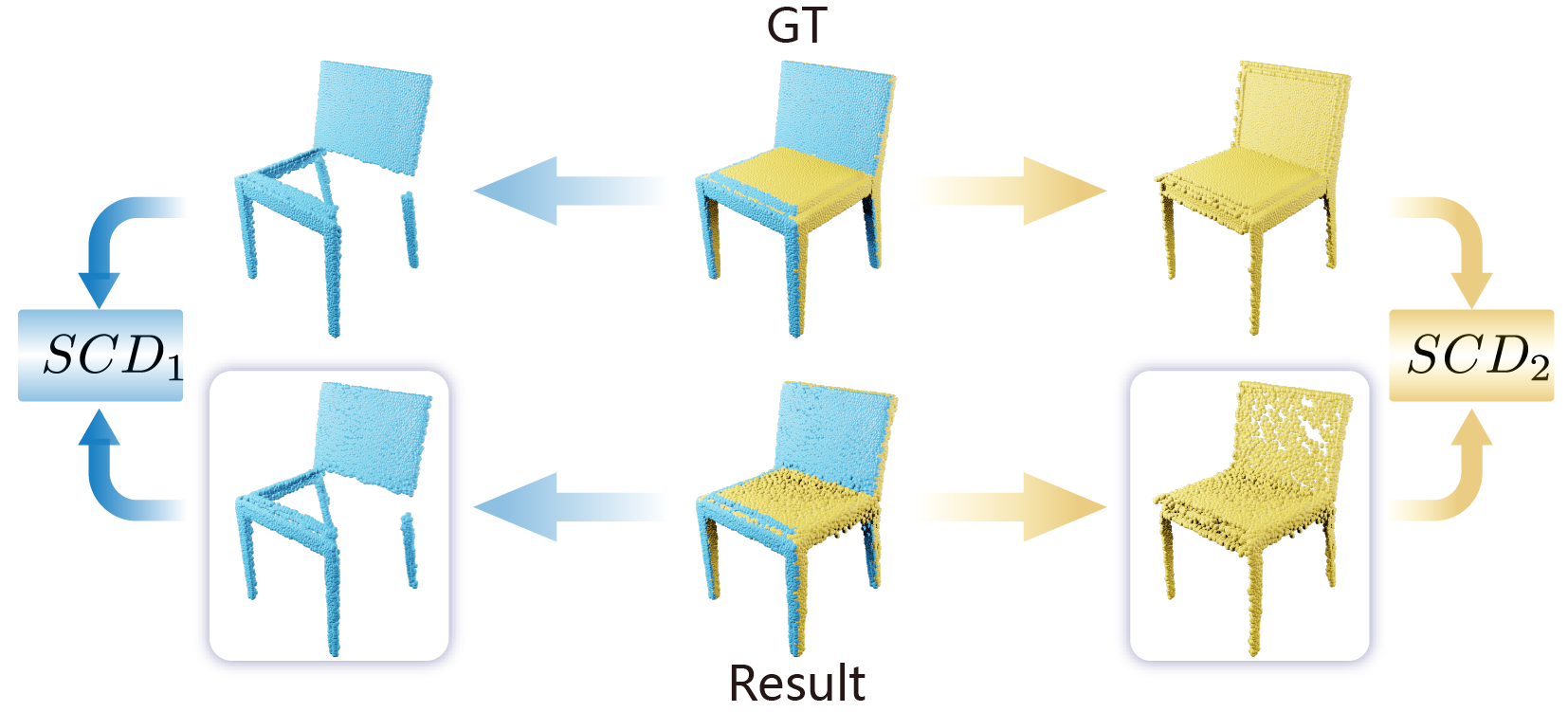

We use Chamfer distance, F-Score, and density-aware Chamfer distance to evaluate the completion results. However, these metrics do not distinguish between the visible part and the missing part, so they cannot evaluate the completion quality of each part separately; that is, they lack granularity. To provide more detail on completion quality, we propose a new metric named “split chamfer distance”, which consists of two values, $SCD_1$ and $SCD_2$. $SCD_1$ evaluates the completion quality of the visible part, and $SCD_2$ evaluates the completion quality of the missing part.

Fig. 6. The computation of $SCD_1$ and $SCD_2$.
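To make the idea of a per-part metric concrete, here is a hedged NumPy sketch. The Chamfer distance and F-Score follow one common convention (unsquared nearest-neighbor distances, averaged in both directions). The split rule in `split_chamfer` is purely an assumption for illustration: it labels a point as "visible" when it lies within a hypothetical threshold `tau` of the partial scan. The exact computation of $SCD_1$ and $SCD_2$ is the one shown in the figure and may differ from this sketch.

```python
import numpy as np

def nn_dist(a, b):
    """Distance from each point in a to its nearest neighbor in b (brute force)."""
    diff = a[:, None, :] - b[None, :, :]                  # (|a|, |b|, 3)
    return np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)

def chamfer(a, b):
    """Symmetric Chamfer distance using unsquared distances."""
    return nn_dist(a, b).mean() + nn_dist(b, a).mean()

def f_score(pred, gt, tau):
    """Harmonic mean of precision and recall at distance threshold tau."""
    precision = (nn_dist(pred, gt) < tau).mean()
    recall = (nn_dist(gt, pred) < tau).mean()
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def split_chamfer(pred, gt, partial, tau):
    """Hypothetical split: points within tau of the partial scan count as visible.

    Returns (scd1, scd2) for the visible and missing parts respectively.
    Assumes both parts are non-empty in pred and in gt.
    """
    pred_vis = nn_dist(pred, partial) < tau
    gt_vis = nn_dist(gt, partial) < tau
    scd1 = chamfer(pred[pred_vis], gt[gt_vis])
    scd2 = chamfer(pred[~pred_vis], gt[~gt_vis])
    return scd1, scd2

# Toy example: the visible point is reproduced exactly, the missing one is off by 0.1.
partial = np.array([[0.0, 0.0, 0.0]])
gt = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
pred = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.1]])
cd = chamfer(pred, gt)
fs = f_score(pred, gt, tau=0.05)
scd1, scd2 = split_chamfer(pred, gt, partial, tau=0.5)
```

In this toy case the global Chamfer distance blends both parts into one number, while the split values show that all of the error comes from the missing part.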

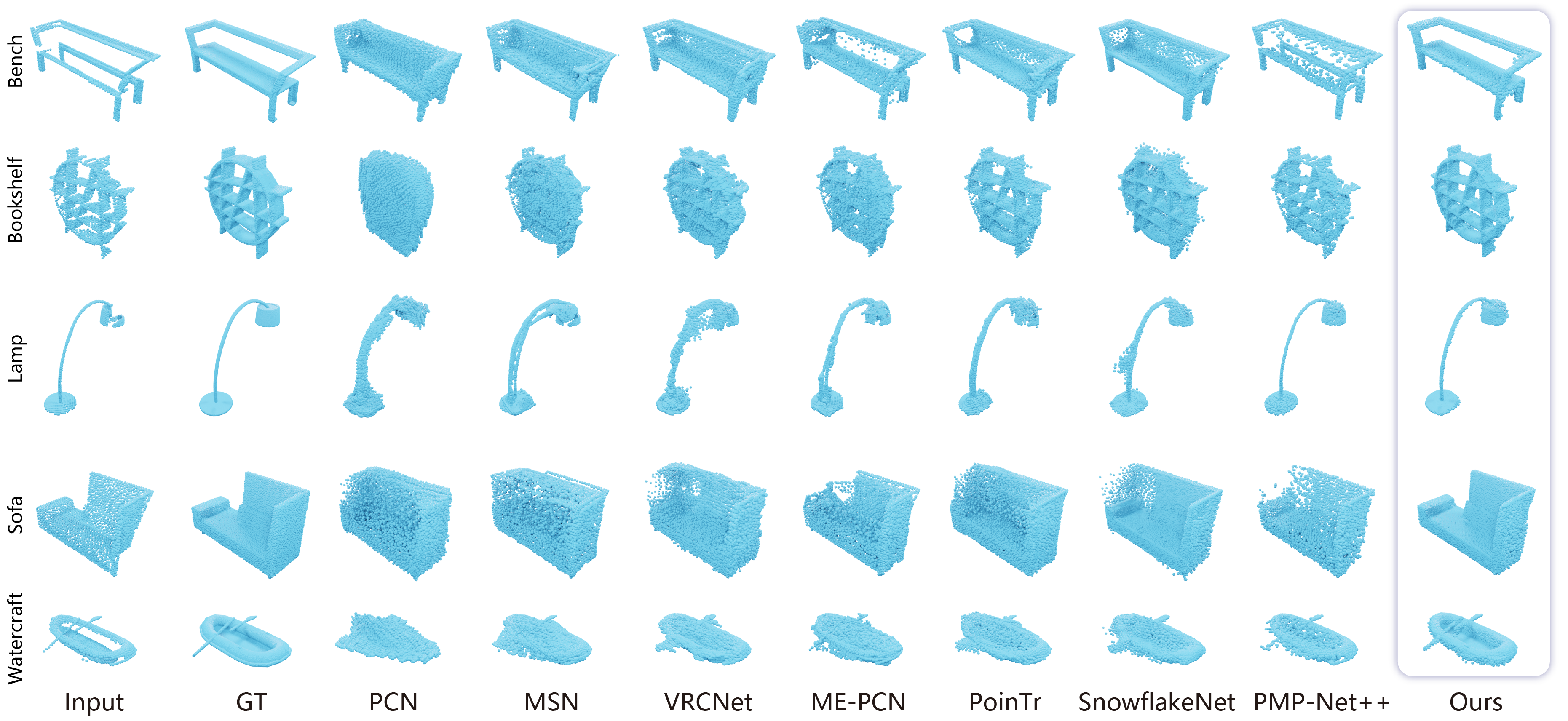

We compare our completion results with those of other methods. In general, our results are cleaner and retain more of the geometric detail present in the partial scan.

Fig. 7. Visual comparison of completion results on the MVP dataset.

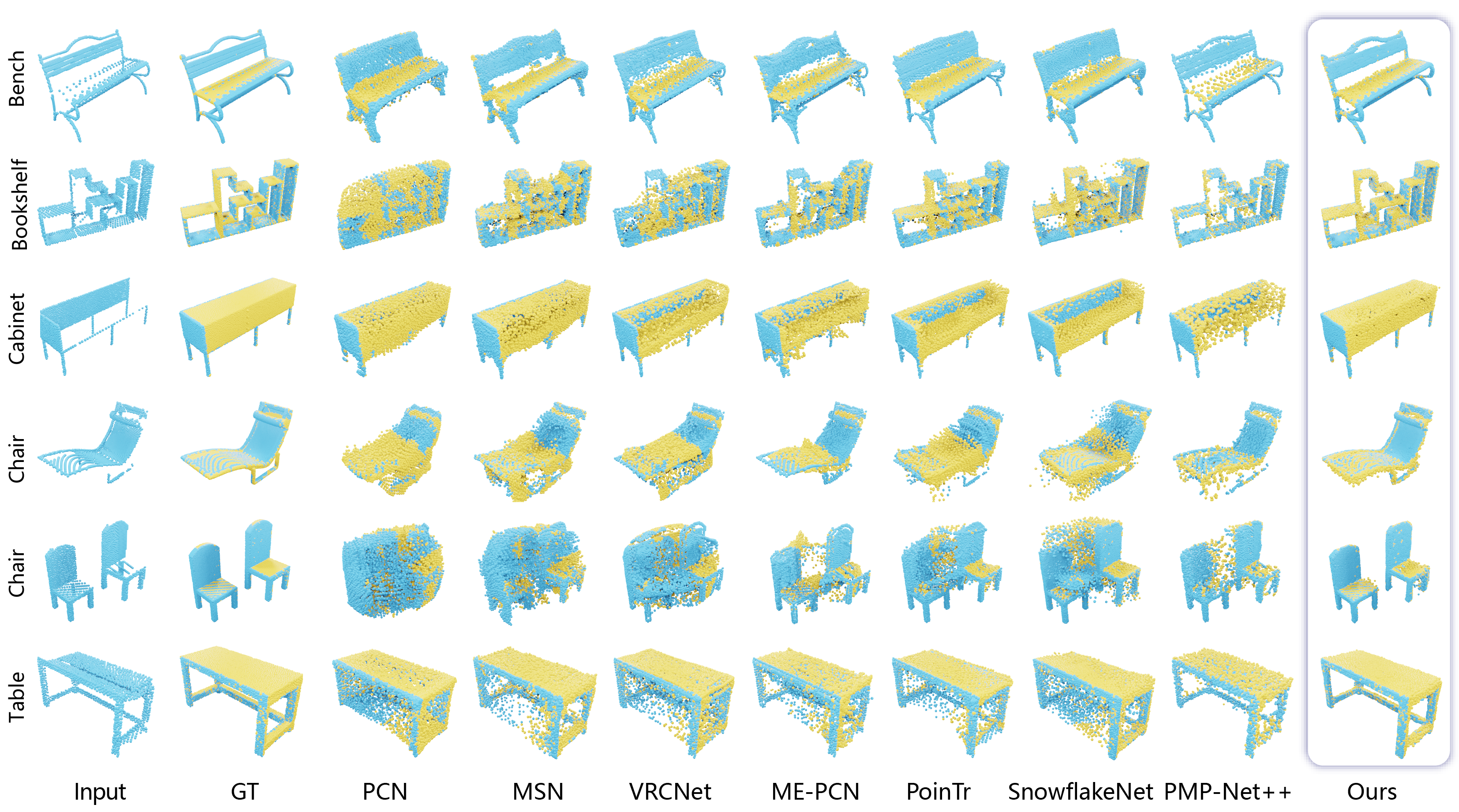

Fig. 6 (in supp). The completion results are visualized in two parts for comparison. Blue points show the completion of the observed part, while yellow points show the completion of the unobserved part.

The source code and data used in this paper are available at the following link.

link: https://github.com/zhangbowen-wx/Shape-Completion-with-Points-in-the-Shadow

This work was supported in part by the National Natural Science Foundation of China (62072366, 61872250), the Key R&D Project of Shaanxi Province (2021QFY01-03HZ), the China Postdoctoral Science Foundation Funded Project (2020M673407), the Guangdong Natural Science Foundation (2021B1515020085), and the Shenzhen Science and Technology Program (RCYX20210609103121030).